"Tomorrow, if you'll promise not to divulge it to a human soul, I'll tell you a great secret." [1]

(Unknown, attributed to Gen. George E. Pickett)

Introduction¶

Authorship attribution empirically discovers the likely author

of a work or works of disputed or unknown authorship.

Single-source authorship attribution applies to domains where

only one author of interest has relevant data. Single-source

authorship attribution is also called authorship verification

or, more generally, single-class classification. This task is

more challenging than multi-source authorship attribution since

the results are inherently more ambiguous. While it is clear to

say, “author x wrote work y with a

likelihood of z, and author q wrote it

with a likelihood of 1 - z,” saying “author

x wrote work y with a likelihood of

z” lacks this comparative context, making the

interpretation less straightforward.

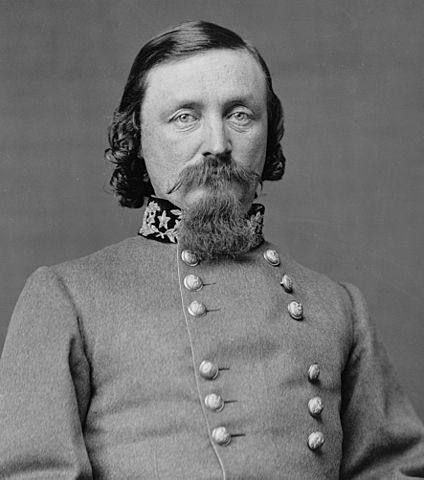

Authorship verification, referred to by Koppel et al. as "the fundamental problem of authorship attribution" [2], is the challenge at the heart of the case of General George E. Pickett's love letters to his wife, LaSalle Corbell Pickett, presented in The Heart of a Soldier: As Revealed in the Intimate Letters of Genl. George E. Pickett, C.S.A., and the second edition Soldier of the South: General Pickett’s War Letters to His Wife. There is an ongoing dispute [3][4][5] as to whether or not the letters presented therein are authentic or falsified by the purported author’s wife, LaSalle Corbell Pickett, as part of a Lost Cause [6] campaign or hagiography of her late husband. LaSalle Corbell Pickett has a wealth of published works [7], whereas the General has virtually no known written works.

.jpg)

Figure 1: George Pickett. Source: Wikimedia

Commons,

https://commons.wikimedia.org/w/index.php?curid=3158912. License: Public Domain.

Figure 2: LaSalle Corbell Pickett. By Pickett,

La Salle Corbell, 1848-1931. Source: Wikimedia Commons,

https://commons.wikimedia.org/w/index.php?curid=112447021. License: Public Domain.

Objectives¶

This project aims to resolve the historical controversy regarding the authorship of the love letters attributed to General George Pickett, determining whether his wife, LaSalle Corbell Pickett, actually wrote them. Some historians [3][4][5] have raised the idea that LaSalle Corbell Pickett falsified the love letters she published under her husband’s name. Her authorship or non-authorship will be determined using traditional statistical and modern deep learning models to analyze and compare the stylistic features of the love letters and LaSalle's known works. We will assume the negative case (LaSalle did not write the letters) to indicate George Pickett authored them, as third possible authors are highly unlikely given the intimate nature of the letters.

Koppel et al. [2] and M. Kocher and J. Savoy [8] have conducted previous work in authorship verification using ‘imposter’ texts. In this method, imposter texts are selected based on their similarity to the known work of a given author. We then calculate a distance measure between the questioned text, the known work, and the imposter texts. The questioned work is attributed to the author if its distance to the known work is under a predetermined threshold. Otherwise, it is labeled indeterminate or attributed to another author, depending on further thresholds and the problem space.

Both models will be compared against the performance of a Gaussian Naive Bayes classifier trained on the same feature set. Gaussian Naive Bayes is a simple but often effective probabilistic classifier that works well for continuous features, making it suitable for stylometric analysis, including this analysis, which uses continuous features as in both inspirational studies [8, p. 261][9, p. 1].

The first model will be based on the SPATIUM-L1 algorithm [8] (see SPATIUM-L1 Implementation section) implemented by M. Kocher and J. Savoy. However, it will diverge in several ways to accommodate our different datasets and for experimental purposes. The original SPATIUM-L1 algorithm was evaluated on the PAN CLEF 2014 dataset - a larger and more variation-controlled dataset than used here. This foundational model was chosen for its proven effectiveness in authorship verification across multiple languages and genres and ease of application.

The second model will be a modern deep learning model using similar features and intuitions to those used in the Gaussian-Bernoulli Deep Belief Network (DBN) implemented by Brocardo et al. [9] (see DNN Implementation section). However, our model will be a vanilla feed-forward deep neural network (Multi-layered perception) for simplicity and clarity in model comparison. Again, in this instance, our dataset is markedly different than that used in the inspirational paper. The GB-DBN model was evaluated on a large dataset of Twitter feeds and Enron emails.

Determining the actual authorship of these letters will clarify historical narratives and contribute to the accuracy of Civil War history. Academically, this research will fill a gap in authorship verification studies, particularly in the context of forgery detection rather than plagiarism detection, which is under-researched [9, p. 1][10][11, p. 1]. This sparsity makes new research in authorship verification meaningful.

Dataset¶

A diverse dataset has been compiled for this authorship attribution project. It includes the known works of LaSalle Corbell Pickett, the disputed love letters, and a selection of 'imposter' texts chosen for their authors' socio-demographic similarity to LaSalle Corbell Pickett or George Pickett. This dataset will be used to train and test the text classification models, comparing the efficacy of a traditional statistical model and a modern deep learning model.

A NOTE ON THE CONTENTS THIS OF DATASET: Given that these works are written by Southern confederates during the time of the American Civil War, the contents are highly objectionable, consisting of xenophobic and anti-black rhetoric, pro-slavery arguments, and eye-dialect, among other problematic speech. Please inspect this data with caution.

Acquisition¶

The texts were meticulously and rigorously sourced from reputable online archives, including The Library of Congress, Internet Archive, HathiTrust, and Project Gutenberg. For a comprehensive list of the texts, their sources, and acquisition dates, please refer to the Appendix (see Appendix A).

Size and Data Types¶

The dataset consists of textual data extracted from LaSalle Corbell Pickett's published works, the disputed love letters, and imposter texts from her and her husband's contemporaries. The data types are plain text (.txt), XML, and HTML files. The total word count of the processed texts is approximately 1,458,093. A wordcount breakdown is shown in the table below:

TABLE OF DATASET WORD COUNTS

| Dataset | Word Count |

|---|---|

| LaSalle's works | 289,136 |

| Love letters | 40,316 |

| Imposter works | 1,128,641 |

| TOTAL | 1,458,093 |

Imposter Text Selection¶

The imposter texts were chosen from the works of 'lookalike' authors to LaSalle Corbell Pickett and General George E. Pickett. The LaSalle imposters were well-educated female Southern authors who wrote during and immediately after the American Civil War. These included Mary Boykin Chesnut, Augusta J. Evans, Grace Elizabeth King, and Helen D. Longstreet - two of which are considered Lost Cause writers [12][13]. The General's imposters comprised other Confederate military officers, including Robert E. Lee, James Longstreet, and Jubal Anderson Early.

Evaluation Methodology¶

The statistical model (SPATIUM-L1) and the deep learning model (DNN) will be evaluated using their F1 scores and their False Acceptance and False Rejection Rates (FAR & FRR). From here on we will adopt some standard symbology for reasons of ease. The symbol A is a label/prediction indicating a work was written by the author under consideration (LaSalle Corbell Pickett, in this study), $\neg$A is a label/prediction indicating a work was not written by said author, and Q is a label indicating a work under question (the love letters, in this study).

F1 Score¶

A model's precision is the ratio of true positive results to all positive results predicted by the model. Its recall is the ratio of true positive results among all real positive examples in the dataset. Both measures become less reliable in cases of class imbalance, which is the case in this study. As shown in the table above, we have a roughly 28:7:1 ratio of classes $\neg$A:A:Q. The F1-score is the harmonic mean between precision and recall, providing a balanced measure of the two that is robust to issues of class imbalance [20].

F1 Score Calculation:

$$ \text{F1-Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} $$

FAR & FRR¶

The following is a description of the FAR, FRR, and EER by Brocardo et al.:

(1) false acceptance rate (FAR), consists of measuring the probability of falsely recognize [sic] someone as a genuine person; (2) false rejection rate (FRR), consists of measuring the probability of rejecting a genuine person; and (3) equal error rate (EER), consists of determining the operating point where the FRR and FAR have a similar value. [9, p. 2]

Equal Error Rate Calculation:

First, the FAR and FRR at various threshold levels for both models are determined, and then the threshold where the FAR and FRR are roughly equivalent is identified to find the EER.

FAR & FRR Caclulation [27]:

$$ \text{FAR} = \frac{\text{number of false positives}}{\text{number of false positives} + \text{number of true negatives}} $$ $$ \text{FRR} = \frac{\text{number of false negatives}}{\text{number of false negatives} + \text{number of true positives}} $$

These evaluation methods are industry standards and appropriate for judging these models' correctness and robustness. They will allow for a precise measure of our models' effectiveness in authorship attribution. These metrics will comprehensively evaluate the models, ensuring a balanced assessment of their capabilities.

Evaluation Against GNB Baseline¶

Both F1 Scores and FAR/FRR rates will be calculated from the predictions of GNB classifiers trained on each model's feature set. This will allow two baselines to compare the classical statistical approach (model inspired by SPATIUM-L1) and the modern deep learning approach (model inspired by the GB-DNN work). It should be noted that the F1-score will need to be calculated from the set of predictions that were not classified "don't know" [8, p. 261] in the case of the SPATIUM-L1 inspired model.

Implementation¶

Preprocessing¶

Data Cleaning¶

The data cleaning process involved multiple steps tailored to the specific formats in which the works were obtained. For texts from HathiTrust, plain text files were combined into single documents per work, removing irrelevant content like title pages and table contents. Each page contained preambles and markers, which were systematically removed. Optical Character Recognition (OCR) errors were corrected using a custom Python script leveraging SpaCy and spellchecker libraries, enabling interactive corrections and building a dictionary of corrections for future use. The text was then minified by combining all lines into a single line. For texts from the Internet Archive and the Library of Congress, XMLDjVu files were converted to text files and cleaned similarly to the HathiTrust texts. Project Gutenberg texts, provided in HTML, were manually cleaned by removing non-textual tags and extraneous content. Given the historical nature of these documents, the data cleaning process was lengthy and accounted for 80% of the time put into this study. For more details on data collection and cleaning, see Appendix B & C.

SPATIUM-L1¶

Data Normalization¶

Since the SPATIUM-L1 algorithm, known for its simplicity and explainability, relies on the most frequent terms, we will normalize our data by converting all characters to lowercase. This will ensure that differences in capitalization do not affect word counts. In addition to lowercasing, we will remove all non-alphabetic symbols (a-z). This diverges from the original SPATIUM-L1 work but was chosen because the source data contained many OCR-related artifacts in spurious punctuation and integer injection into the original text. Another divergence will be in the way we divide the corpus. The original algorithm treats an entire corpus as a single observation. Borrowing from the work by Brocardo et al. [9] and in the interest of obtaining statistically robust results for evaluation with F1-Scores and FAR/FRR, the SPATIUM-L1 algorithm will be adapted to work with chunks of 2,500 characters. This number is arbitrary but was chosen to balance the number of extractable frequent terms and the number of observations obtainable for the statistical robustness of the models' evaluations. This chunking strategy is supported by the authorship verification work of M. Koppel and J. Schler [11, p. 1]:

"... if the text we wish to attribute is long [...] then we can chunk the text so that we effectively have multiple examples which are known to be either all written by the author or all not written by the author."

The SPATIUM-L1 algorithm is straightforward, and no further normalization is needed.

Feature Extraction¶

Given our chunking strategy, our feature extraction will also be altered slightly. Rather than extracting the 200 most frequent terms, we will extract the 1,000 most frequent terms from the entire corpus (minus Q) and populate a vocabulary vector based on it with word occurrences per chunk. This is in an effort to capture a broader range of stylistic nuance and converge on a more accurate model of the author's style. The original SPATIUM-L1 paper used the top 200 most frequent terms in an effort to make the algorithm simple and explainable. Those are not the primary objectives of this research. This divergence also helps align the feature set with the capabilities of a deep neural network, which benefits from larger and more complex data inputs.

DNN¶

Data Normalization¶

Due to the OCR artifacts mentioned above and for reasons of comparability, the same normalization steps will be conducted on the data for the DNN model, namely, lowercasing, punctuation and integer removal, and 2,500 character chunking. This differs from the much smaller chunks used in the work by Brocardo et al. [9, p. 2] on which these features are based. Their research used chunk sizes of 140, 280, and 500. However, their corpus consisted of Twitter messages and emails, markedly shorter form works than those considered in this study.

Feature Extraction¶

Drawing from Brocardo et al.'s [9, pp. 3-4] work, the following features will be extracted:

-

Lexical features:

-

Feature 1 (

f1): Sparse term frequency vectors based on the top 1,000 most frequent terms in A + $\neg$A (this is to partially align with the SPATIUM-L1 algorithm) -

Feature 2 (

f2): Average word length in the chunk -

Feature 3 (

f3): Top 100 most frequent spaceless character 4-grams -

Feature 4 (

f4): Top 10 most frequent word bigrams

-

Feature 1 (

-

Structural features:

-

Feature 5 (

f5): Number of words in the chunk -

Feature 6 (

f6): Number of unique words in the chunk (vocabulary richness)

-

Feature 5 (

-

Stylistic features:

-

Feature 7 (

f7): Frequency of function words

-

Feature 7 (

These features will be normalized to real-valued inputs between 0 and 1 and be represented in a feature vector for input into the model.

##### Import necessary libraries

import os

import re

from nltk.probability import FreqDist, ConditionalFreqDist

import numpy as np

# Helper functions

def get_txt_filenames(input_folder):

"""Get all .txt file names in a directory."""

return [file for file in os.listdir(input_folder) if file.endswith(".txt")]

def combine_text_files(input_folder, files):

"""Combine all .txt files in an input directory into a single string."""

text = ""

for filename in files:

if filename.endswith(".txt"):

with open(

os.path.join(input_folder, filename), "r", encoding="utf-8"

) as file:

text = text + " ".join(file.readlines()) + " "

return text

def remove_non_alpha_symbols(input_string):

"""Remove all non-alphabetic (a-z) and non-whitespace symbols."""

# Based on limasxgoesto0's answer on StackOverflow at https://stackoverflow.com/a/22521156 [14]

regex = re.compile('[^a-zA-Z\s]')

alpha_string = regex.sub('', input_string)

# End of adapted code

# Reduce multiple spaces to one

return ' '.join(alpha_string.split())

def get_chunks(input_string, chunk_size=2500):

"""Convert a string into an array of contiguous substrings of length `chunk_size`."""

# Generate chunks

chunks = [input_string[x:x+chunk_size] for x in range(0, len(input_string), chunk_size)]

# Pad the final chunk if shorter than 2500

chunks[-1] += " " * (chunk_size - len(chunks[-1]))

return chunks

def get_n_most_frequent_words(input_string, n=1000):

"""Get the frequencies of the top `n` most frequent words in the input string."""

# Based on the NLTK docs at https://www.nltk.org/api/nltk.probability.FreqDist.html [15]

fd = FreqDist(word for word in input_string.split(' ')).most_common(n)

# End of adapted code

return {tup[0]: tup[1] for tup in fd}

def get_norm_vector(input_vector, max_val=None):

"""Normalize each feature in an input vector to a scale between 0 and 1 using a maximum normalization scheme

if `max_val` is assigned, or by dividing by the total length of the input vector if not [9]."""

if not max_val:

return np.array(input_vector) / len(input_vector)

elif isinstance(max_val, int) or isinstance(max_val, float):

return np.array([np.array(input_vector) / max_val])

else:

raise Exception("max_value of wrong type.")

def get_sparse_word_freq_vector(input_string, vocab):

"""Get the sparse vocabulary vector representing frequencies of vocab words found in an input string."""

# Based on the NLTK docs at https://www.nltk.org/api/nltk.probability.FreqDist.html [15]

fd = FreqDist(word for word in input_string.split(' '))

# End of adapted code

return [fd[word] if word in fd else 0 for word in vocab]

def get_max_word_length(input_string):

"""Get the length in characters of the longest word in an input string."""

words = input_string.split()

return max([len(word) for word in words])

def get_avg_word_length(input_string):

"""Get the average word length of an input string."""

# Based on Tim Pietzcker's answer on StackOverflow at https://stackoverflow.com/a/12761576 [16]

words = input_string.split()

# End of adapted code

return sum(len(word) for word in words) / len(words)

def get_spaceless_char_ngrams(input_string, n=4):

"""Get all character ngrams of length `n` from an input string, minus spaces."""

no_spaces_string = ''.join(input_string.split())

return [no_spaces_string[i:i+n] for i in range(len(no_spaces_string) - (n - 1))]

def get_n_most_frequent_ngrams(ngrams_array, n):

"""Get the top `n` most frequent ngrams in the input array."""

assert(isinstance(ngrams_array, list))

# Based on the NLTK docs at https://www.nltk.org/api/nltk.probability.FreqDist.html [15]

fd = FreqDist(ngram for ngram in ngrams_array).most_common(n)

# End of adapted code

return {tup[0]: tup[1] for tup in fd}

def get_sparse_char_ngram_freq_vector(input_string, vocab):

"""Get the sparse vocabulary vector representing frequencies of vocab character ngrams found in an input string."""

spaceless_char_ngrams = get_spaceless_char_ngrams(input_string, n=len(list(vocab.keys())[0]))

# Based on the NLTK docs at https://www.nltk.org/api/nltk.probability.FreqDist.html [15]

fd = FreqDist(ngram for ngram in spaceless_char_ngrams)

# End of adapted code

return [fd[ngram] if ngram in fd else 0 for ngram in vocab]

def get_word_ngrams(input_string, n=2):

"""Get all word ngrams of length `n` from an input string."""

words = input_string.split()

return [' '.join(words[i:i+n]) for i in range(len(words) - (n - 1))]

def get_sparse_word_ngram_freq_vector(input_string, vocab):

"""Get the sparse vocabulary vector representing frequencies of vocab word ngrams found in an input string."""

word_ngrams = get_word_ngrams(input_string, n=len(list(vocab.keys())[0].split()))

# Based on the NLTK docs at https://www.nltk.org/api/nltk.probability.FreqDist.html [15]

fd = FreqDist(ngram for ngram in word_ngrams)

# End of adapted code

return [fd[ngram] if ngram in fd else 0 for ngram in vocab]

def get_num_of_words(input_string):

"""Get the number of words in an input string."""

return len(input_string.split())

def get_max_words_in_chunk(chunks_array):

"""Get the length in words of the longest chunk in an input array."""

return max([get_num_of_words(chunk) for chunk in chunks_array])

def get_num_unique_words(input_string):

"""Get the number of unique words in an input string."""

return len(set(input_string.split()))

def get_max_unique_words_in_chunk(chunks_array):

"""Get the length in unique words of the longest chunk in an input array."""

return max([get_num_unique_words(chunk) for chunk in chunks_array])

def get_function_words():

"""Get function words from the dataset obtainable at https://sequencepublishing.com/1/academic/academic.html."""

# Based on the same function words dataset [17] used in the GB-DBN paper by Brocardo et al. [9]

folder_path = 'data/English_Function_Words_Set'

files = get_txt_filenames(folder_path)

function_words = []

for filename in files:

if filename.endswith(".txt"):

with open(os.path.join(folder_path, filename), "r", encoding="ISO-8859-1" ) as file:

for line in file.readlines():

if line[:2] == "//":

continue

else:

# Some function words in the dataset are numerical (e.g. 0%, 1%, etc.)

# These were handled differently by the compiler, but since our study

# strips out integers from our dataset, we will do the same here, and

# avoid these special cases.

regex = re.compile('[^a-zA-Z\s]')

alpha = regex.search(line) == None

if alpha:

function_words.append(line[:-1])

return function_words

def get_freq_of_function_words(input_string):

"""Get the frequency of function words present in an input string."""

function_words = get_function_words()

max_length_in_words = max(len(word.split(' ')) for word in function_words)

# Based on the NLTK docs at https://www.nltk.org/api/nltk.probability.ConditionalFreqDist.html [18]

input_words_array = input_string.split(' ')

found_function_words = []

i = 0

while i < len(input_words_array):

found = False

for j in range(max_length_in_words, 0, -1):

candidate_function_word = ' '.join(input_words_array[i:i+j])

if candidate_function_word in function_words:

found_function_words.append((candidate_function_word in function_words, candidate_function_word))

i += j

found = True

break

if not found:

i += 1

# End of adapted code

return {tup[0]: tup[1] for tup in ConditionalFreqDist(found_function_words)[True].most_common()}

def get_sparse_function_word_freq_vector(input_string, vocab):

"""Get the sparse vocabulary vector representing frequencies of function words found in an input string."""

fd = get_freq_of_function_words(input_string)

return [fd[vocab_word] if vocab_word in fd else 0 for vocab_word in vocab]

### Data normalization for SPATIUM-L1 and DNN models is the same, namely:

### 1. Lowercase

### 2. Remove all non-alphabetic symbols (a-z)

### 3. Divide into chunks of 2,500 characters

# LaSalle's works

folder_A = 'data/cleaned/lasalle_corbell_pickett'

# Imposter works

folder_notA = 'data/cleaned/imposters'

# Love Letters

folder_Q = 'data/cleaned/love_letters'

# Read in all files

files_A = get_txt_filenames(folder_A)

files_notA = get_txt_filenames(folder_notA)

files_Q = get_txt_filenames(folder_Q)

# Combine all files

text_A = combine_text_files(folder_A, files_A)

text_notA = combine_text_files(folder_notA, files_notA)

text_Q = combine_text_files(folder_Q, files_Q)

## Normalization step 1

# Convert all text to lowercase

text_A_lower = text_A.lower()

text_notA_lower = text_notA.lower()

text_Q_lower = text_Q.lower()

## Normalization step 2

# Remove all non-alphabetic (a-z) and non-whitespace symbols

text_A_alpha = remove_non_alpha_symbols(text_A_lower)

text_notA_alpha = remove_non_alpha_symbols(text_notA_lower)

text_Q_alpha = remove_non_alpha_symbols(text_Q_lower)

## Normalization step 3

# Divide into chunks of 2,500 characters

chunks_A = get_chunks(text_A_alpha)

chunks_notA = get_chunks(text_notA_alpha)

chunks_Q = get_chunks(text_Q_alpha)

## Feature extraction for SPATIUM-L1 *and* DNN models

# 1.1 Get the top 1,000 most common terms across the entire corpus `A + ¬A`

f1_vocab = get_n_most_frequent_words(text_A_alpha + text_notA_alpha)

# 1.3 `f1`: Sparse word frequency vectors

f1_A = [get_sparse_word_freq_vector(chunk, f1_vocab) for chunk in chunks_A]

f1_notA = [get_sparse_word_freq_vector(chunk, f1_vocab) for chunk in chunks_notA]

f1_Q = [get_sparse_word_freq_vector(chunk, f1_vocab) for chunk in chunks_Q]

## Feature extraction DNN model

# 2.1 Get max word length for normalization

f2_max_val = get_max_word_length(text_A_alpha + text_notA_alpha)

# 2.2 `f2`: Average word length in the chunk

f2_A = [get_avg_word_length(chunk) for chunk in chunks_A]

f2_notA = [get_avg_word_length(chunk) for chunk in chunks_notA]

f2_Q = [get_avg_word_length(chunk) for chunk in chunks_Q]

# 3.1 Get the top 100 most common character 4-grams across the entire corpus `A + ¬A`

f3_vocab = get_n_most_frequent_ngrams(get_spaceless_char_ngrams(text_A_alpha + text_notA_alpha), 100)

# 3.2 `f3`: Sparse character ngram frequency vectors

f3_A = [get_sparse_char_ngram_freq_vector(chunk, f3_vocab) for chunk in chunks_A]

f3_notA = [get_sparse_char_ngram_freq_vector(chunk, f3_vocab) for chunk in chunks_notA]

f3_Q = [get_sparse_char_ngram_freq_vector(chunk, f3_vocab) for chunk in chunks_Q]

# 4.1 Get the top 10 most common word bigrams across the entire corpus `A + ¬A`

f4_vocab = get_n_most_frequent_ngrams(get_word_ngrams(text_A_alpha + text_notA_alpha), 10)

# 4.2 `f4`: Sparse word ngram frequency vectors

f4_A = [get_sparse_word_ngram_freq_vector(chunk, f4_vocab) for chunk in chunks_A]

f4_notA = [get_sparse_word_ngram_freq_vector(chunk, f4_vocab) for chunk in chunks_notA]

f4_Q = [get_sparse_word_ngram_freq_vector(chunk, f4_vocab) for chunk in chunks_Q]

# 5.1 Get max number of words in any chunk

f5_max_val = get_max_words_in_chunk(chunks_A + chunks_notA)

# 5.2 `f5`: Number of words in the chunk

f5_A = [get_num_of_words(chunk) for chunk in chunks_A]

f5_notA = [get_num_of_words(chunk) for chunk in chunks_notA]

f5_Q = [get_num_of_words(chunk) for chunk in chunks_Q]

# 6.1 Get max number of unique words in any chunk

f6_max_val = get_max_unique_words_in_chunk(chunks_A + chunks_notA)

# 6.2 `f6`: Number of unique words in the chunk (vocabulary richness)

f6_A = [get_num_unique_words(chunk) for chunk in chunks_A]

f6_notA = [get_num_unique_words(chunk) for chunk in chunks_notA]

f6_Q = [get_num_unique_words(chunk) for chunk in chunks_Q]

# 7.1 Get the frequency of all function words

f7_vocab = get_freq_of_function_words(text_A_alpha + text_notA_alpha)

# 7. `f7`: Sparse function word frequency vectors

f7_A = [get_sparse_function_word_freq_vector(chunk, f7_vocab) for chunk in chunks_A]

f7_notA = [get_sparse_function_word_freq_vector(chunk, f7_vocab) for chunk in chunks_notA]

f7_Q = [get_sparse_function_word_freq_vector(chunk, f7_vocab) for chunk in chunks_Q]

Feature Normalization¶

All extracted features will be normalized through a max-normalization strategy, where each feature in a set will be replaced with its ratio over the maximum instance of the same feature found in the training data as a whole, as outlined in the Brocardo et al.'s work [9, p. 3]. In addition, for the DNN model, all normalized feature sets per chunk will be concatenated for input into the model.

## Feature normalization

# Normalize each feature vector's elements to a scale between 0 and 1 using a maximum normalization scheme,

# i.e. replace every element by its ratio over the maximum value for the same feature over the corpus. [9, p. 3]

# 1. Get normalized sparse word frequency vectors

f1_A_norm = [get_norm_vector(vec) for vec in f1_A]

f1_notA_norm = [get_norm_vector(vec) for vec in f1_notA]

f1_Q_norm = [get_norm_vector(vec) for vec in f1_Q]

# 2. Get normalized average word lengths in chunks

f2_A_norm = [get_norm_vector(vec, max_val=f2_max_val) for vec in f2_A]

f2_notA_norm = [get_norm_vector(vec, max_val=f2_max_val) for vec in f2_notA]

f2_Q_norm = [get_norm_vector(vec, max_val=f2_max_val) for vec in f2_Q]

# 3. Get normalized sparse character ngram frequency vectors

f3_A_norm = [get_norm_vector(vec) for vec in f3_A]

f3_notA_norm = [get_norm_vector(vec) for vec in f3_notA]

f3_Q_norm = [get_norm_vector(vec) for vec in f3_Q]

# 4. Get normalized sparse word ngram frequency vectors

f4_A_norm = [get_norm_vector(vec) for vec in f4_A]

f4_notA_norm = [get_norm_vector(vec) for vec in f4_notA]

f4_Q_norm = [get_norm_vector(vec) for vec in f4_Q]

# 5. Get normalized counts of words in chunks

f5_A_norm = [get_norm_vector(vec, max_val=f5_max_val) for vec in f5_A]

f5_notA_norm = [get_norm_vector(vec, max_val=f5_max_val) for vec in f5_notA]

f5_Q_norm = [get_norm_vector(vec, max_val=f5_max_val) for vec in f5_Q]

# 6. Get normalized counts of unique words in chunks

f6_A_norm = [get_norm_vector(vec, max_val=f6_max_val) for vec in f6_A]

f6_notA_norm = [get_norm_vector(vec, max_val=f6_max_val) for vec in f6_notA]

f6_Q_norm = [get_norm_vector(vec, max_val=f6_max_val) for vec in f6_Q]

# 7. Get normalized sparse function word frequency vectors

f7_A_norm = [get_norm_vector(vec) for vec in f7_A]

f7_notA_norm = [get_norm_vector(vec) for vec in f7_notA]

f7_Q_norm = [get_norm_vector(vec) for vec in f7_Q]

## Features `f1` are ready to be consumed by the SPATIUM-L1 as they are.

## Concatenate all features (1-7) to form a single feature vector (denoted `F`) per chunk for processing by our DNN model.

# Obtain final per-chunk feature vectors for each corpus (A, ¬A, and Q) for consumption by the DNN model

# These three sets of feature vectors will be denoted: `F_A_norm`, `F_notA_norm`, and `F_Q_norm`

# 1 Construct `F_A_norm`

# `F_A_norm` consists of all `f{1-7}_A_norm` vector sets already constructed.

# Based on endolith's answer on StackOverflow at https://stackoverflow.com/a/9775378 [19]

F_A_norm = [np.concatenate((v1, v2, v3, v4, v5, v6, v7)) for v1, v2, v3, v4, v5, v6, v7 in zip(f1_A_norm, f2_A_norm, f3_A_norm, f4_A_norm, f5_A_norm, f6_A_norm, f7_A_norm)]

# End of adapted code.

# 2 Construct `F_notA_norm`

# `F_notA_norm` consists of all `f{1-7}_notA_norm` vector sets already constructed.

# Based on endolith's answer on StackOverflow at https://stackoverflow.com/a/9775378 [19]

F_notA_norm = [np.concatenate((v1, v2, v3, v4, v5, v6, v7)) for v1, v2, v3, v4, v5, v6, v7 in zip(f1_notA_norm, f2_notA_norm, f3_notA_norm, f4_notA_norm, f5_notA_norm, f6_notA_norm, f7_notA_norm)]

# End of adapted code.

# 3 Construct `F_Q_norm`

# `F_Q_norm` consists of all `f{1-7}_Q_norm` vector sets already constructed.

# Based on endolith's answer on StackOverflow at https://stackoverflow.com/a/9775378 [19]

F_Q_norm = [np.concatenate((v1, v2, v3, v4, v5, v6, v7)) for v1, v2, v3, v4, v5, v6, v7 in zip(f1_Q_norm, f2_Q_norm, f3_Q_norm, f4_Q_norm, f5_Q_norm, f6_Q_norm, f7_Q_norm)]

# End of adapted code.

Data Splitting¶

For comparability reasons, the data-splitting strategy will be

kept as close to identical as possible for both models. Since we

have a small number of 'observations' (chunks) -

A + ¬A = 618 + 2,419 = 3,037 - we will be using a

faux K-fold cross-validation strategy for the SPATIUM-L1

inspired work, and K-fold cross-validation for the DNN model.

This is for more statistically meaningful evaluations. The

'faux' in the former approach is because our 'folds' will be

generated randomly on each iteration. So, for K = 10 in our

case, rather than taking the test set to be 0-10% of the data on

the first iteration and then 10-20% on the second, the 10% slice

will be populated randomly, thus having a probability of some

overlap of test sets in two arbitrary folds m and

n. This choice was taken in order to allow for

author profile building, where we need to know the training

features and their labels at the training stage but still have a

random test set of features and labels for meaningful

evaluation.

Further, we will employ a stratified approach wherein the relative ratio of classes is roughly the same across folds or faux folds. This is helpful in datasets with class imbalances [20], which we have between A and ¬A at about 1:4. Finally, to ensure robust evaluations of our models, we will use a holdout test set, that will remain unseen throughout the training for a final evaluation [21].

The ratios of these splits will be somewhat arbitrary but follow the standard rule of thumb of 80/20 train/test. There will be ten folds, a standard number among machine learning practitioners. This means the data will first be divided into an 80/20 split of the training and holdout test set data. Then, the training data will be divided into ten folds, each maintaining roughly the same ratio of A and ¬A samples as in the entire corpus (1:4).

The final tally of chunks per data split is shown in the calculations below

txt

A_train = 618 * 0.8 ~= 494

notA_train = 2,419 * 0.8 ~= 1,935

A_holdout = 618 * 0.2 ~= 123

notA_holdout = 2,419 * 0.2 ~= 483

**FOLDS**

A_train_fold_n ~= 494 * 0.8 ~= 359

notA_train_fold_n ~= 1,935 * 0.8 ~= 1,548

A_test_fold_n ~= 494 * 0.2 ~= 98

notA_test_fold_n ~= 1,935 * 0.2 ~= 387

# Import needed libraries

from sklearn.model_selection import train_test_split

from sklearn.utils import shuffle

import numpy as np

def shuffle_features_and_targets(features, targets, seed):

"""Return a random permutation of input features and targets arrays where the same permutation is applied to both based on a random state input `seed`"""

# Based on the sckikit-learn docs at https://scikit-learn.org/stable/modules/generated/sklearn.utils.shuffle.html#shuffle [22]

features = shuffle(features, random_state=seed)

targets = shuffle(targets, random_state=seed)

# End adapted code

return (features, targets)

def make_feature_target_tup(pos_features, neg_features):

"""Create a features/target tuple of arrays from input arrays of positive and negative samples respectively"""

# Create the target vector for the positive cases `pos_features`

target_pos = np.array([1 for i in range(len(pos_features))])

# Create the target vector for the negative cases `neg_features`

target_neg = np.array([0 for i in range(len(neg_features))])

# Concatenate the features

features = np.concatenate((pos_features, neg_features))

# Concatenate the targets

target = np.concatenate((target_pos, target_neg))

return (features, target)

def train_test_split_m1(features, target, test_size, train_size, random_state):

"""Split input features and targets into four sets:

X_train_positive

X_train_negative

X_test

y_test

with the former two being sets positive and negative features respectively

and the latter two being the shuffled set of features and the like-shuffled

set of targets.

Relative class ratio is maintained in X_train_positive/X_train_negative,

X_test, and y_test"""

features, target = shuffle_features_and_targets(features, target, random_state)

## Split into train/test sets on an test_size:train_size ratio, keeping class proportion roughly the same

_X_train, X_test, _y_train, y_test = train_test_split(features, target, test_size=test_size, train_size=train_size, random_state=random_state, stratify=target)

# Sort x_train and _y_train based on targets to create profiles [8, p. 260]

# Based on Spataner's answer on Reddit at https://www.reddit.com/r/learnpython/comments/rzw5w6/comment/hrxq8s8/ [24]

sorted_indices = np.argsort(_y_train)

_X_train_sorted = _X_train[sorted_indices]

_y_train_sorted = _y_train[sorted_indices]

# Now _X_train_sorted and _y_train_sorted are sorted based on the targets

# The first part of the array will contain all the negative samples (0's)

# The second part of the array will contain all the positive samples (1's)

# Find the split point between negative and positive samples

split_point = np.sum(_y_train_sorted == 0)

# Create profiles for negative and positive samples

X_train_negative = _X_train_sorted[:split_point]

X_train_positive = _X_train_sorted[split_point:]

# End adapted code

return X_train_positive, X_train_negative, X_test, y_test

def get_far_and_fer_rates(preds, ground_truths):

"""Get the FAR (false acceptance rate) and the FRR (false rejection rate) as

described in the GB-DBN paper [9, p. 2]:

"(1) false acceptance rate (FAR), consists of measuring the probability of falsely

recognize someone as a genuine person;

(2) false rejection rate (FRR), consists of measuring the probability of rejecting

a genuine person; and

(3) equal error rate (EER), consists of determining the operating point where the

FRR and FAR have a similar value."

This function also returns an undecided rate (`ur`) as this is also an important metric.

"""

# Calculate total number of predictions

total_predictions = len(preds)

# Get the number of undecided predictions

undecided_count = sum(np.isnan(preds))

# Calculate the undecided rate

ur = undecided_count / total_predictions if total_predictions > 0 else 0

# cull out the np.nans in preds and the elements at matching indices in ground_truths

mask = np.isfinite(preds)

preds = preds[mask]

ground_truths = ground_truths[mask]

# Calculate the false acceptances (identifying a notA work as A)

num_fa = np.sum((preds == 1) & (ground_truths == 0))

# Calculate the false rejections (identifying an A work as notA)

num_fr = np.sum((preds == 0) & (ground_truths == 1))

# # Calculate false acceptance rate

# FP / (FP + TN)

tn = np.sum(ground_truths == 0)

far = num_fa / (num_fa + tn)

# # Calculate false rejection rate

# FN / (FN + TP)

tp = np.sum(ground_truths == 1)

frr = num_fr / (num_fr + tp)

return ur, far, frr

## Create features/target arrays

# `f1_A_norm`, and `f1_notA_norm`, for the SPATIUM-L1 model (denoted `m1`)

m1_features, m1_target = make_feature_target_tup(f1_A_norm, f1_notA_norm)

# `F_A_norm`, and `F_notA_norm`, for the DNN model (denoted `m2`)

m2_features, m2_target = make_feature_target_tup(F_A_norm, F_notA_norm)

# Use a random state seed to keep features and their targets in the same relative positions

SEED = 0

# Declare other important constants

TRAIN_SIZE = 0.8

HOLDOUT_SIZE = 1 - TRAIN_SIZE

## Split into train/holdout sets on an 4:1 ratio, keeping class proportion roughly the same

## Since the SPATIUM-L1 uses author 'profiles', we will want to randomize our test set selection and then reorder our training features

m1_X_train_positive, m1_X_train_negative, m1_X_holdout, m1_y_holdout = train_test_split_m1(m1_features, m1_target, test_size=HOLDOUT_SIZE, train_size=TRAIN_SIZE, random_state=SEED)

## Split into train/holdout sets on an 4:1 ratio, keeping class proportion roughly the same using the `stratify` argument in scikit-learn's train_test_split function [20]

m2_X_train, m2_X_holdout, m2_y_train, m2_y_holdout = train_test_split(m2_features, m2_target, test_size=HOLDOUT_SIZE, train_size=HOLDOUT_SIZE, random_state=SEED, stratify=m2_target)

Baseline performance¶

Gaussian Naive Bayes classification, a simple and efficient statistical model for textual classification, will serve as our baseline performance measure. It will be trained twice, once on each set of training features for the models under consideration. Then, each GNB model will make predictions on its respective holdout set to yield two relevant baselines. This approach allows us to assess the potential benefits of our dual approach directly and compare architectural and feature extraction differences.

# Adapted from the Scikit-Learn docs found at https://scikit-learn.org/stable/modules/naive_bayes.html [26]

# Import needed libraries

from sklearn.naive_bayes import GaussianNB

from sklearn.metrics import f1_score

# Fit a Guassian Naive Bayes classifier to our SPATIUM-L1 feature set and predict

m1_gnb_baseline = GaussianNB()

m1_gnb_y_preds = m1_gnb_baseline.fit(

np.concatenate((m1_X_train_positive, m1_X_train_negative)),

[1 if i < len(m1_X_train_positive) else 0 for i in range(len(m1_X_train_positive)+len(m1_X_train_negative))]).predict(m1_X_holdout)

# Fit a Guassian Naive Bayes classifier to our DNN feature set and predict

m2_gnb_baseline = GaussianNB()

m2_gnb_y_preds = m2_gnb_baseline.fit(

m2_X_train,

m2_y_train).predict(m2_X_holdout)

# End adapted code

print("Gaussian Naive Bayes performance of SPATIUM-L1 features.")

# Calculate F1-Score

m1_gnb_f1 = f1_score(m1_y_holdout, m1_gnb_y_preds)

# Calculate FEE metrics

ur, far, frr = get_far_and_fer_rates(m1_gnb_y_preds, m1_y_holdout)

# Output

print(f"ur: {ur}, far: {far}, frr: {frr}")

print(f"f1-score: {m1_gnb_f1}")

print("\nGaussian Naive Bayes performance of DNN features.")

# Calculate F1-Score

m2_gnb_f1 = f1_score(m2_y_holdout, m2_gnb_y_preds)

# Calculate FEE metrics

ur, far, frr = get_far_and_fer_rates(m2_gnb_y_preds, m2_y_holdout)

# Output

print(f"ur: {ur}, far: {far}, frr: {frr}")

print(f"f1-score: {m2_gnb_f1}")

Gaussian Naive Bayes performance of SPATIUM-L1 features. ur: 0.0, far: 0.16262975778546712, frr: 0.03125 f1-score: 0.7100591715976331 Gaussian Naive Bayes performance of DNN features. ur: 0.0, far: 0.10536044362292052, frr: 0.26627218934911245 f1-score: 0.6076923076923076

Model Training & Comparison¶

SPATIUM-L1 Implemenation¶

The SPATIUM-L1 algorithm [8, p. 261] (adapted for a faux-K-fold cross-validation and chunking strategy):

- Split corpus into holdout/train sets.

-

Run the following

K = 10times:-

Randomly obtain a

k test/k trainsplit of the main training set in a1/K:(1-1/K)stratified ratio. -

Initialize

threshold_A = 0.975andthreshold_notA = 1.025(in line with the values used by M. Kocher and J. Savoy [8]) -

Initialize

learning_rate = 0.01(arbitrary starting point), which will control how much decision thresholds need to be adjusted on each iteration. -

Initialize

decay_rate = 0.9(arbitrary starting point), which will control how quickly thelearning_ratediminishes on each iteration. -

For each chunk in the

k testset:-

a) Calculate the L1 distance between the chunk and all

other chunks in

k_A. -

b) Calculate the L1 distance between the chunk and all

other chunks in

k_notA. -

d) Calculate

Δ_A(mean distance tok_Achunks) andΔ_notA(mean distance tok_notAchunks). -

e) Apply the decision rule:

-

If

Δ_A / Δ_notA < threshold_A, classify as A -

If

Δ_A / Δ_notA > threshold_notA, classify as ¬A - Otherwise, classify as "don't know"

-

If

-

f) Obtain the FAR and FRR values

- If the absolute difference exceeds 5%, adjust thresholds to converge.

- Otherwise, adjust thresholds to reduce answers of "don't know".

-

a) Calculate the L1 distance between the chunk and all

other chunks in

-

Randomly obtain a

-

Pick optimal values of

threshold_Aandthreshold_notAand run inference on the holdout set.

# Import necessary libraries

import numpy as np

from sklearn.metrics.pairwise import manhattan_distances

import pandas as pd

def apply_m1_decision_rule(delta_A, delta_notA, threshold_A, threshold_notA):

"""Apply the decision rule from M. Kocher and J. Savoy's SPATIUM-L1 algorithm [8] using parameterized thresholds.

- If Δ_A / Δ_notA < `threshold_A`, classify as A

- If Δ_A / Δ_notA > `threshold_notA`, classify as ¬A

- Otherwise, classify as "don't know"

"""

if delta_A / delta_notA < threshold_A:

return 1

elif delta_A / delta_notA > threshold_notA:

return 0

else:

# nan indicating "don't know"

return np.nan

# Declare constants

K = 10 # number of faux folds

# initialize decision thresholds to the same used as those used in

# M. Kocher and J. Savoy's SPATIUM-L1 algorithm [8]

threshold_A=0.975

threshold_notA=1.025

# hyperperamters controlling how aggressively to adjust decision thresholds and how quickly to reduce this rate

learning_rate = 0.01

decay_rate = 0.9

k = 0 # iterator to keep track of current fold

preds = np.array([])

ground_truths = np.array([])

# create a pandas DataFrame to hold metrics and select best thresholds

m1_metrics = pd.DataFrame({'ur': [], 'far': [], 'frr': [], 'learning_rate': [], 'threshold_A': [], 'threshold_notA': []})

while k < K:

print(f"fold {k}")

## Create features/target arrays

fold_k_features, fold_k_target = make_feature_target_tup(m1_X_train_positive, m1_X_train_negative)

## Split into train/test sets on an 4:1 ratio, keeping class proportion roughly the same

fold_k_m1_X_train_positive, fold_k_m1_X_train_negative, fold_k_X_test, fold_k_y_test = train_test_split_m1(

fold_k_features, fold_k_target,

test_size=1/K, # the test set should be 1/Kth the size of the entire dataset

train_size=1-1/K, # the train set should be 1 - 1/Kth the size of the test set

random_state=k # we want different permutations on every 'fold'

)

fold_k_preds = np.array([])

for X in fold_k_X_test:

# Adapted from scikit-learn docs at https://scikit-learn.org/stable/modules/generated/sklearn.metrics.pairwise.manhattan_distances.html [23]

delta_A = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(a, (1, -1)))[0][0] for a in fold_k_m1_X_train_positive])

delta_notA = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(not_a, (1, -1)))[0][0] for not_a in fold_k_m1_X_train_negative])

# End adapted code

# apply decision rule and extend predictions array with new predictions output

fold_k_preds = np.append(fold_k_preds, apply_m1_decision_rule(delta_A, delta_notA, threshold_A, threshold_notA))

# Calculate get the far and frr and adjust thresholds to converge on the eer (operating point at which

# the far and frr are roughly equivalent) [9, p. 2]

preds = np.append(preds, fold_k_preds)

ground_truths = np.append(ground_truths, fold_k_y_test)

ur, far, frr = get_far_and_fer_rates(fold_k_preds, fold_k_y_test)

print(f"ur: {ur}, far: {far}, frr: {frr}")

print(f"learning rate: {learning_rate}, A threshold: {threshold_A}, notA threshold {threshold_notA}")

m1_metrics.loc[len(m1_metrics)] = [ur, far, frr, learning_rate, threshold_A, threshold_notA]

if abs(far - frr) < 0.05:

# acceptance is within 5% of rejection rate

# undecided window can narrow

threshold_A += threshold_A * learning_rate

threshold_notA -= threshold_notA * learning_rate

elif far > frr:

# acceptance is higher than rejection

# acceptance AND rejection thresholds can lower

threshold_A -= threshold_A * learning_rate

threshold_notA -= threshold_notA * learning_rate

elif far < frr:

# acceptance is lower than rejection

# acceptance and rejection thresholds can rise

threshold_A += threshold_A * learning_rate

threshold_notA += threshold_notA * learning_rate

learning_rate = learning_rate * decay_rate

k += 1

fold 0 ur: 0.32098765432098764, far: 0.3191489361702128, frr: 0.02631578947368421 learning rate: 0.01, A threshold: 0.975, notA threshold 1.025 fold 1 ur: 0.31275720164609055, far: 0.30687830687830686, frr: 0.1 learning rate: 0.009000000000000001, A threshold: 0.9652499999999999, notA threshold 1.0147499999999998 fold 2 ur: 0.31275720164609055, far: 0.23163841807909605, frr: 0.08823529411764706 learning rate: 0.008100000000000001, A threshold: 0.9565627499999999, notA threshold 1.0056172499999998 fold 3 ur: 0.2962962962962963, far: 0.19886363636363635, frr: 0.11764705882352941 learning rate: 0.007290000000000001, A threshold: 0.9488145917249999, notA threshold 0.9974717502749998 fold 4 ur: 0.2839506172839506, far: 0.12578616352201258, frr: 0.18604651162790697 learning rate: 0.006561000000000002, A threshold: 0.9418977333513247, notA threshold 0.990200181215495 fold 5 ur: 0.2880658436213992, far: 0.18235294117647058, frr: 0.22727272727272727 learning rate: 0.005904900000000002, A threshold: 0.9480775243798427, notA threshold 0.9966968846044499 fold 6 ur: 0.18106995884773663, far: 0.18461538461538463, frr: 0.14893617021276595 learning rate: 0.005314410000000002, A threshold: 0.9536758273535533, notA threshold 0.9908114891705492 fold 7 ur: 0.12345679012345678, far: 0.23555555555555555, frr: 0.14583333333333334 learning rate: 0.004782969000000002, A threshold: 0.9587440517071992, notA threshold 0.9855459106843864 fold 8 ur: 0.205761316872428, far: 0.2107843137254902, frr: 0.1794871794871795 learning rate: 0.004304672100000002, A threshold: 0.9541584086289493, notA threshold 0.9808320751455062 fold 9 ur: 0.09053497942386832, far: 0.24675324675324675, frr: 0.1896551724137931 learning rate: 0.003874204890000002, A threshold: 0.9582657477095547, notA threshold 0.9766099146768422

import matplotlib.pyplot as plt

# plot the DataFrame columns

plt.figure(figsize=(10, 6))

plt.plot(m1_metrics.index, m1_metrics['ur'], label='Undecided Rate (UR)', marker='o')

plt.plot(m1_metrics.index, m1_metrics['far'], label='False Acceptance Rate (FAR)', marker='x')

plt.plot(m1_metrics.index, m1_metrics['frr'], label='False Rejection Rate (FRR)', marker='s')

# adding labels and title

plt.xlabel('Fold')

plt.ylabel('Rate')

plt.title('Rates over Folds')

plt.legend()

plt.grid(True)

# Display the plot

plt.show()

m1_metrics

| ur | far | frr | learning_rate | threshold_A | threshold_notA | |

|---|---|---|---|---|---|---|

| 0 | 0.320988 | 0.319149 | 0.026316 | 0.010000 | 0.975000 | 1.025000 |

| 1 | 0.312757 | 0.306878 | 0.100000 | 0.009000 | 0.965250 | 1.014750 |

| 2 | 0.312757 | 0.231638 | 0.088235 | 0.008100 | 0.956563 | 1.005617 |

| 3 | 0.296296 | 0.198864 | 0.117647 | 0.007290 | 0.948815 | 0.997472 |

| 4 | 0.283951 | 0.125786 | 0.186047 | 0.006561 | 0.941898 | 0.990200 |

| 5 | 0.288066 | 0.182353 | 0.227273 | 0.005905 | 0.948078 | 0.996697 |

| 6 | 0.181070 | 0.184615 | 0.148936 | 0.005314 | 0.953676 | 0.990811 |

| 7 | 0.123457 | 0.235556 | 0.145833 | 0.004783 | 0.958744 | 0.985546 |

| 8 | 0.205761 | 0.210784 | 0.179487 | 0.004305 | 0.954158 | 0.980832 |

| 9 | 0.090535 | 0.246753 | 0.189655 | 0.003874 | 0.958266 | 0.976610 |

From the chart and data above, two candidate EERs at folds 6 and

8 can be identified, with a range of ~3%. However, fold six at

threshold_A = 0.953676 and

threshold_notA = 0.990811 has lower false labeling

rates. Paired with a somewhat lower indecision rate, these were

chosen as the model's inference parameters.

Now we will run the algorithm with our chosen thresholds on the holdout test set.

# set decision thresholds to the best-performing values from our training loop above

holdout_threshold_A = m1_metrics.loc[6]['threshold_A']

holdout_threshold_notA = m1_metrics.loc[6]['threshold_notA']

# Sort x_holdout and y_holdout based on targets to create profiles [8, p. 260]

# Based on Spataner's answer on Reddit at https://www.reddit.com/r/learnpython/comments/rzw5w6/comment/hrxq8s8/ [24]

sorted_indices = np.argsort(m1_y_holdout)

m1_X_holdout_sorted = m1_X_holdout[sorted_indices]

m1_y_holdout_sorted = m1_y_holdout[sorted_indices]

# Now m1_X_holdout_sorted and m1_y_holdout_sorted are sorted based on the targets

# The first part of the array will contain all the negative samples (0's)

# The second part of the array will contain all the positive samples (1's)

# Find the split point between negative and positive samples

split_point = np.sum(m1_y_holdout_sorted == 0)

# Create profiles for negative and positive samples

m1_X_holdout_negative = m1_X_holdout_sorted[:split_point]

m1_X_holdout_positive = m1_X_holdout_sorted[split_point:]

# End of adapted code

m1_holdout_preds = np.array([])

# Run inference on the negative samples

for i, X in enumerate(m1_X_holdout_negative):

# remove the current test example (q) from the author profile

samples_minus_X = np.concatenate((m1_X_holdout_negative[:i],m1_X_holdout_negative[i+1:]))

# Adapted from scikit-learn docs at https://scikit-learn.org/stable/modules/generated/sklearn.metrics.pairwise.manhattan_distances.html [23]

delta_A = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(a, (1, -1)))[0][0] for a in m1_X_holdout_positive])

delta_notA = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(not_a, (1, -1)))[0][0] for not_a in samples_minus_X])

# End of adapted code

# apply decision rule and extend predictions array with new predictions output

m1_holdout_preds = np.append(m1_holdout_preds, apply_m1_decision_rule(delta_A, delta_notA, holdout_threshold_A, holdout_threshold_notA))

# Run inference on the positive samples

for i, X in enumerate(m1_X_holdout_positive):

# remove the current test example (q) from the author profile

samples_minus_X = np.concatenate((m1_X_holdout_positive[:i],m1_X_holdout_positive[i+1:]))

# Adapted from scikit-learn docs at https://scikit-learn.org/stable/modules/generated/sklearn.metrics.pairwise.manhattan_distances.html [23]

delta_A = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(a, (1, -1)))[0][0] for a in samples_minus_X])

delta_notA = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(not_a, (1, -1)))[0][0] for not_a in m1_X_holdout_negative])

# End of adapted code

# apply decision rule and extend predictions array with new predictions output

m1_holdout_preds = np.append(m1_holdout_preds, apply_m1_decision_rule(delta_A, delta_notA, holdout_threshold_A, holdout_threshold_notA))

# Output FEE metrics

ur, far, frr = get_far_and_fer_rates(m1_holdout_preds, m1_y_holdout_sorted)

print(f"ur: {ur}, far: {far}, frr: {frr}")

ur: 0.4457236842105263, far: 0.027491408934707903, frr: 0.19402985074626866

We get a UR of ~44%, a FAR of ~3%, and an FRR of ~19%.

These numbers are similar, though flipped, to those achieved by the Gaussian Naive Bayes classifier baseline, which had a FAR of ~16% and an FRR of ~3%.

Looking at the actual determinate predictions (those not labeled "don't know") reveals a fairly strong set of predictions.

# cull out the np.nans in preds and the elements at matching indices in ground_truths

holdout_mask = np.isfinite(m1_holdout_preds)

holdout_preds_sans_nan = m1_holdout_preds[holdout_mask]

m1_y_holdout_sorted_sans_nan = m1_y_holdout_sorted[holdout_mask]

viewing_split_point = np.sum(m1_y_holdout_sorted_sans_nan == 0)

real_works_preds = holdout_preds_sans_nan[:viewing_split_point].astype(int)

imposter_works_preds = holdout_preds_sans_nan[viewing_split_point:]

print("Predictions on works by LaSalle (A). 1 means the model predicted that she wrote it.")

print(real_works_preds)

print("\nPredictions on works not by LaSalle (¬A). 0 means the model predicted that she did not write it.")

print(imposter_works_preds)

Predictions on works by LaSalle (A). 1 means the model predicted that she wrote it. [0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0] Predictions on works not by LaSalle (¬A). 0 means the model predicted that she did not write it. [1. 1. 0. 1. 1. 1. 1. 1. 1. 0. 0. 1. 1. 1. 0. 1. 1. 1. 1. 1. 1. 1. 1. 0. 1. 1. 0. 1. 1. 1. 1. 1. 0. 0. 1. 0. 1. 1. 1. 0. 1. 1. 1. 1. 1. 1. 0. 0. 1. 1. 0. 1. 1. 1.]

Unfortunately, the undecided rate of 44% is somewhat high.

It is important to note that the baseline (GNB) achieved similar performance without the ability to choose "don't know" as a label.

Let's look at the f1 score.

# Import needed libraries

from sklearn.metrics import f1_score

m2_holdout_f1 = f1_score(m1_y_holdout_sorted_sans_nan, holdout_preds_sans_nan)

print(f"f1-score: {m2_holdout_f1}")

f1-score: 0.7961165048543689

While strong, it should be noted that this score is calculated from the labels (and matching ground truths) that were not predicted as "don't know".

DNN Implementation¶

The DNN algorithm:

- Split corpus into holdout/train sets.

-

Run the following

K = 10times:-

Obtain

k test/k trainfold of the main training set in a1/K:(1-1/K)stratified ratio. -

Initialize model architecture:

- Input layer (size based on feature vector)

- Hidden layers (three dense layers with ReLU activation)

- Dropout layer (rate to be tuned)

- Output layer (1 neuron with sigmoid activation for binary classification)

-

Initialize hyperparameters:

- Loss function: Binary cross-entropy

- Optimizer: Adam

- Batch size: 50 (lower end of those used in the GB-DBN paper due to our smaller corpus)

- Number of epochs: 25 (half that of the low-end used in the GB-DBN paper by the same reasoning)

- Train the model on

k trainset -

Evaluate on

k testset:- Calculate FAR, FRR, and F1-score

- Store metrics for each fold

-

Obtain

- Analyze results across folds to determine optimal dropout rate.

- Train final model with optimal dropout rate on full training set.

- Evaluate final model on holdout set.

Layer architecture and optimizer were chosen through experimentation. Categorical-cross-entropy was tested and found to perform worse. Several variations of deeper networks and larger (more connections) layers were tested, also resulting in lower evaluations on the test sets. The loss function was a natural choice given the binary nature of the problem and the need for confidence scores or continuous outputs representing likelihoods. ReLU activations were somewhat arbitrary but used given their ubiquity of use in such networks. The final sigmoid activation was necessary, given the binary classification problem. A dropout layer was added and tuned to guard against overfitting, given the small dataset.

# Import needed libraries

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout

from tensorflow.keras.losses import binary_crossentropy

from tensorflow.keras.optimizers import Adam

from sklearn.model_selection import StratifiedKFold

import numpy as np

# import warnings

# import tensorflow as tf

# import os

# warnings.filterwarnings('ignore')

# os.environ['TF_CPP_MIN_LOG_LEVEL'] = '0' # or any {'0', '1', '2'}

# tf.get_logger().setLevel('ERROR')

# Declare constants

K = 10 # number of faux folds

# Define the K-fold Cross Validator

kfold = StratifiedKFold(n_splits=K, shuffle=True, random_state=SEED)

k = 0 # iterator to keep track of current fold

# Code adapted from Lucas Eduardo's GitHub article found at https://github.com/christianversloot/machine-learning-articles/blob/main/how-to-use-k-fold-cross-validation-with-keras.md [25]

# Model hyperparameters

no_classes = 1

loss_function = binary_crossentropy

optimizer = "Nadam"

batch_size = 50

no_epochs = 25

dropout_rates = np.linspace(0,0.5,K)

verbosity = 0

# End of adapted code

# create a pandas DataFrame to hold metrics and select best hyperparamters

m2_metrics = pd.DataFrame({'dropout rate': [], 'far': [], 'frr': [], 'f1-score': []})

for train, test in kfold.split(m2_X_train, m2_y_train):

# Define the model architecture

model = Sequential()

model.add(Dense(64, activation='relu', input_shape=(m2_X_train.shape[1],)))

model.add(Dense(128, activation='relu'))

# Droput layer to reduce overfitting

model.add(Dropout(rate=dropout_rates[k], seed=SEED))

model.add(Dense(128, activation='relu'))

model.add(Dense(64, activation='relu'))

# Sigmoid activation for binary classification

model.add(Dense(no_classes, activation='sigmoid'))

# Code adapted from Lucas Eduardo's GitHub article found at https://github.com/christianversloot/machine-learning-articles/blob/main/how-to-use-k-fold-cross-validation-with-keras.md [25]

# Compile the model

model.compile(loss=loss_function,

optimizer=optimizer,

metrics=['Precision', 'Recall'])

# Generate a print

print(f'fold {k}')

# Fit data to model

history = model.fit(m2_X_train[train], m2_y_train[train],

batch_size=batch_size,

epochs=no_epochs,

verbose=verbosity)

# Generate generalization metrics

scores = model.evaluate(m2_X_train[test], m2_y_train[test], verbose=verbosity)

# End of adapted code

truth = m2_y_train[test]

preds = np.round(model.predict(m2_X_train[test], verbose=verbosity).flatten())

_, far, frr = get_far_and_fer_rates(truth, preds)

loss = scores[0]

precision = scores[1]

recall = scores[2]

# Calculate the f1-score with the formula `f1 = 2 * (precision * recall)/(precision + recall)`

f1 = 2 * (precision * recall)/(precision + recall) if precision + recall > 0 else 0

print(f"far: {far}, frr: {frr}, f1-score: {f1}")

m2_metrics.loc[len(m2_metrics)] = [dropout_rates[k], far, frr, f1]

# Increase fold number

k = k + 1

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

fold 0 far: 0.08928571428571429, frr: 0.16666666666666666, f1-score: 0.6956521930676551 fold 1

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.07272727272727272, frr: 0.09090909090909091, f1-score: 0.7826086983563538 fold 2

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.07407407407407407, frr: 0.15384615384615385, f1-score: 0.7500000084853833 fold 3

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.10714285714285714, frr: 0.26666666666666666, f1-score: 0.5833333449231247 fold 4

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.0392156862745098, frr: 0.14285714285714285, f1-score: 0.8333333134651184 fold 5

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.09836065573770492, frr: 0.0, f1-score: 0.6666666666666666 fold 6

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.02127659574468085, frr: 0.21052631578947367, f1-score: 0.8148148324754503 fold 7

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.07272727272727272, frr: 0.1, f1-score: 0.7619047773127652 fold 8

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.058823529411764705, frr: 0.2, f1-score: 0.75 fold 9

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.07142857142857142, frr: 0.0, f1-score: 0.8000000143051146

m2_metrics

| dropout rate | far | frr | f1-score | |

|---|---|---|---|---|

| 0 | 0.000000 | 0.089286 | 0.166667 | 0.695652 |

| 1 | 0.055556 | 0.072727 | 0.090909 | 0.782609 |

| 2 | 0.111111 | 0.074074 | 0.153846 | 0.750000 |

| 3 | 0.166667 | 0.107143 | 0.266667 | 0.583333 |

| 4 | 0.222222 | 0.039216 | 0.142857 | 0.833333 |

| 5 | 0.277778 | 0.098361 | 0.000000 | 0.666667 |

| 6 | 0.333333 | 0.021277 | 0.210526 | 0.814815 |

| 7 | 0.388889 | 0.072727 | 0.100000 | 0.761905 |

| 8 | 0.444444 | 0.058824 | 0.200000 | 0.750000 |

| 9 | 0.500000 | 0.071429 | 0.000000 | 0.800000 |

After running the K-fold algorithm many times (30-40), it was observed that a dropout rate of 0.222222 yielded the best metrics most of the time, with 0.111111 having the best metrics slightly less. One or the other was the top-performing dropout rate, roughly 90% of the time. These top-performing metrics were an f1-score ranging from 0.8 to 0.85 and EERs with ranges between 0.00 and ~0.15.

# Define the model architecture

model = Sequential()

model.add(Dense(64, activation='relu', input_shape=(m2_X_train.shape[1],)))

model.add(Dense(128, activation='relu'))

# Droput layer to reduce overfitting

model.add(Dropout(rate=dropout_rates[4], seed=SEED))

model.add(Dense(128, activation='relu'))

model.add(Dense(64, activation='relu'))

# Sigmoid activation for binary classification

model.add(Dense(no_classes, activation='sigmoid'))

# Code adapted from Lucas Eduardo's GitHub article found at https://github.com/christianversloot/machine-learning-articles/blob/main/how-to-use-k-fold-cross-validation-with-keras.md [25]

# Compile the model

model.compile(loss=loss_function,

optimizer=optimizer,

metrics=['Precision', 'Recall'])

# Fit data to model

history = model.fit(m2_X_train, m2_y_train,

batch_size=batch_size,

epochs=no_epochs,

verbose=verbosity)

# End of adapted code

# Predict the holdout set

m2_holdout_preds = np.round(model.predict(m2_X_holdout, verbose=verbosity).flatten())

_, m2_holdout_far, m2_holdout_frr = get_far_and_fer_rates(m2_y_holdout, m2_holdout_preds)

m2_holdout_loss = scores[0]

m2_holdout_precision = scores[1]

m2_holdout_recall = scores[2]

# Calculate the f1-score with the formula `f1 = 2 * (precision * recall)/(precision + recall)`

m2_holdout_f1 = 2 * (m2_holdout_precision * m2_holdout_recall)/(m2_holdout_precision + m2_holdout_recall)

print(f"far: {m2_holdout_far}, frr: {m2_holdout_frr}, f1-score: {m2_holdout_f1}")

/Users/zacbolton/python/nlp-env-1/lib/python3.11/site-packages/keras/src/layers/core/dense.py:87: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead. super().__init__(activity_regularizer=activity_regularizer, **kwargs)

far: 0.06679389312977099, frr: 0.20134228187919462, f1-score: 0.8000000143051146

These results are interesting and very near our previous model's performance of FAR = ~3%, FRR = ~20%, and F1 score = ~0.80. The only notable difference is a slightly higher FAR at ~7%. It is important to recognize that these results were obtained from a model without the ability to predict a label of "don't know." An EER range from 3% or 7 to 20% is substantial when compared to that obtained from Brocardo et al. at 8.21% to 16.73% [9, p. 2].

This EER obtained by the DNN model is clearly under that obtained by the GNB baseline model of ~11% to ~27%.

The Love Letters¶

We will use our models to predict the likelihood of LaSalle Corbell Pickett's authorship of the love letters attributed to General George E. Pickett.

# Run prediction on the love letters with model 1

# set decision thresholds to the best-performing values from our training loop above

holdout_threshold_A = m1_metrics.loc[6]['threshold_A']

holdout_threshold_notA = m1_metrics.loc[6]['threshold_notA']

m1_Q_preds = np.array([])

# Run inference on the Q chunks

for i, X in enumerate(f1_Q_norm):

# Adapted from scikit-learn docs at https://scikit-learn.org/stable/modules/generated/sklearn.metrics.pairwise.manhattan_distances.html [23]

delta_A = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(a, (1, -1)))[0][0] for a in f1_A_norm])

delta_notA = np.mean([manhattan_distances(np.reshape(X, (1, -1)), np.reshape(not_a, (1, -1)))[0][0] for not_a in f1_notA_norm])

# End of adapted code

# apply decision rule and extend predictions array with new predictions output

m1_Q_preds = np.append(m1_holdout_preds, apply_m1_decision_rule(delta_A, delta_notA, holdout_threshold_A, holdout_threshold_notA))

# Output the percentage of chunks predicted "don't know"

m1_Q_preds_notnan_mask = np.isnan(m1_Q_preds)

print(f"Percent chunks labeled \"don't know\" by model 1: {len(m1_Q_preds[m1_Q_preds_notnan_mask])/len(m1_Q_preds) * 100:.0f}%")

# Output the percentage of chunks predicted to be written by LaSalle

m1_Q_preds_nan_mask = np.isfinite(m1_Q_preds)

m1_Q_decided_preds = m1_Q_preds[m1_Q_preds_nan_mask]

print(f"Percent chunks labeled as written by LaSalle (1) by model 1: {np.sum(m1_Q_decided_preds == 1)/len(m1_Q_preds) * 100:.0f}%")

# Output the percentage of chunks predicted not to be written by LaSalle

print(f"Percent chunks labeled as not written by LaSalle (0) by model 1: {np.sum(m1_Q_decided_preds == 0)/len(m1_Q_preds) * 100:.0f}%")

Percent chunks labeled "don't know" by model 1: 44% Percent chunks labeled as written by LaSalle (1) by model 1: 8% Percent chunks labeled as not written by LaSalle (0) by model 1: 47%

# Run prediction on the love letters with model 2

# Predict the holdout set

m2_Q_preds = np.round(model.predict(np.array(F_Q_norm), verbose=verbosity).flatten())

# Output the percentage of chunks predicted to be written by LaSalle

print(f"Percent chunks labeled as written by LaSalle (1) by model 2: {np.sum(m2_Q_preds == 1)/len(m2_Q_preds) * 100:.0f}%")

# Output the percentage of chunks predicted not to be written by LaSalle

print(f"Percent chunks labeled as not written by LaSalle (0) by model 2: {np.sum(m2_Q_preds == 0)/len(m2_Q_preds) * 100:.0f}%")

Percent chunks labeled as written by LaSalle (1) by model 2: 48% Percent chunks labeled as not written by LaSalle (0) by model 2: 52%

Results¶

Both models agree, though weakly, that the letters were not written by LaSalle Corbell Pickett. The SPATIUM-L1 inspired model (model 1) classified 47% of the chunks as not written by LaSalle, compared to only 8% as written by her, with 44% labeled as "don't know". The DNN model (model 2) showed a slightly more balanced but still negative attribution, classifying 52% of the chunks as not written by LaSalle and 48% as written by her. While these results are not conclusive, they suggest a tendency toward rejecting LaSalle's authorship of the love letters. However, the high uncertainty in model 1 and the close split in model 2 indicate that this conclusion should be treated with caution.

Conclusions¶

Performance Analysis & Comparative Discussion¶

The two models implemented in this study, the SPATIUM-L1 variant and the Deep Neural Network (DNN), showed comparable performance in the authorship verification task, but with some notable differences.

The SPATIUM-L1 variant achieved an Equal Error Rate (EER) range of ~3% to ~19%, with a False Acceptance Rate (FAR) of ~3% and a False Rejection Rate (FRR) of ~19%. The model also had a high undecided rate of 44%, indicating a conservative approach to classification. When making determinate predictions, SPATIUM-L1 achieved a strong F1 score of 0.796.

The DNN model, on the other hand, achieved an EER range of 7% to 20%, with a FAR of ~7% and an FRR of ~20%. Unlike SPATIUM-L1, the DNN model did not have an undecided option, forcing a decision for every input. The DNN achieved an F1 score of 0.800, slightly higher than SPATIUM-L1's determinate predictions.

Both models outperformed the Gaussian Naive Bayes (GNB) baseline trained on the DNN feature set, which had an EER range of 11% to 27% and an F1 score of 0.608. They also outperformed the GNB trained on the SPATIUM-L1 inspired feature set which achieved an F1 score of 0.710. Its EER range was slightly more favorable though, outperforming both main models with a range from ~3% to ~16%.

To visualize the performance comparison:

generate_model_performance_plot() # See Appendix D

The statistical SPATIUM-L1 model offers interpretability, with its smaller and human interpretable feature set, and the ability to abstain from decisions when uncertain. This can be advantageous in high-stakes scenarios where false positives or negatives could have serious consequences [8, p. 262]. The model's performance on determinate predictions suggests it makes high-quality decisions when confident.

The DNN model demonstrates the power of deep learning in capturing complex stylometric patterns, such as character/word n-grams and vocabulary richness. Its slightly higher F1 score indicates better overall classification performance but at the cost of interpretability and the ability to say, "I don't know."

The choice between these models may depend on the specific application. When explainability is crucial, such as forensics and court cases, SPATIUM-L1 may be preferred. For applications prioritizing overall accuracy and where indecision is less desirable, the DNN model could be more suitable.